Chain of Thought Prompting

Chain of Thought (CoT) prompting is a way to enhance the reasoning and problem-solving abilities of Large Language Models (LLMs).

It helps guide the LLM through a step-by-step process to arrive at a final result. It’s like showing your work on a math problem. This is done by breaking down the prompt into smaller sections which are solved one at a time. By prompting in this fashion, your language models can generate more accurate and coherent responses, especially for complex queries.

In this article, we are going to cover five examples of CoT prompting for both ChatGPT and the OpenAI API: Zero-Shot, One-Shot, Few-Shot, Automatic, and Multimodal.

If you do not want to read the article, I have a full YouTube video going over each of the examples provided in the article.

Also, if you are looking for any work that deals with Large Language Models, hit us up over email or the contact form on the website.

If you came here for the ChatGPT examples, feel free to skip this section. But if you want to code, please follow these steps.

For this tutorial, you will need to pip install langchain, openai, and langchain_openai.

!pip install langchain
!pip install openai
!pip install langchain_openai

Once these are installed, you can import OpenAI, FewShotPromptTemplate, PromptTemplate, and ChatOpenAI.

from langchain_openai import OpenAI
from langchain.prompts.few_shot import FewShotPromptTemplate
from langchain.prompts.prompt import PromptTemplate
from langchain_openai import ChatOpenAI

Lastly, set your OpenAI API key.

import os
os.environ["OPENAI_API_KEY"] = ""

Zero-Shot Chain-of-Thought prompting

ChatGPT Example

From the prompt above, what makes this a chain of thought is the last line: Explain your answer step by step.

Below are the results when the prompt is fed to ChatGPT.

OpenAI API Example


llm = ChatOpenAI(model="gpt-3.5-turbo", temperature=0)

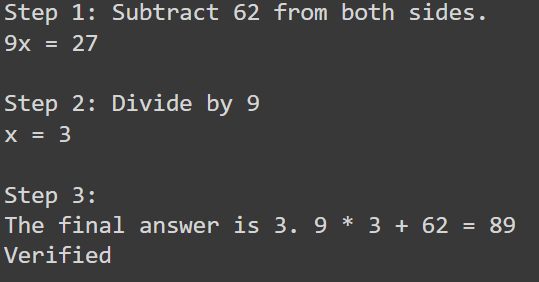

question = "Can you solve the following math question? Please show how you got the answer: 9x + 62 = 89"

From the prompt above, what makes this a chain of thought is the last line: Please show how you got the answer.
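If you plan to ask several questions in this style, it may help to build the prompt with a small helper. The sketch below is only an illustration: `make_zero_shot_cot` and `DEFAULT_TRIGGER` are hypothetical names, not part of LangChain or the OpenAI library.

```python
# Hypothetical helper: wraps any problem in the zero-shot CoT wording used above.
DEFAULT_TRIGGER = "Please show how you got the answer"


def make_zero_shot_cot(problem: str, trigger: str = DEFAULT_TRIGGER) -> str:
    """Return a zero-shot CoT prompt: the task, the trigger phrase, then the problem."""
    return f"Can you solve the following math question? {trigger}: {problem.strip()}"


print(make_zero_shot_cot("9x + 62 = 89"))
```

Swapping the `trigger` argument for a phrase such as "Explain your answer step by step" gives the ChatGPT variant of the same prompt.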

output = llm.invoke(question)

print(output.content)

Below are the results we will get:

To solve the equation 9x + 62 = 89, we need to isolate the variable x.

First, subtract 62 from both sides of the equation:

9x + 62 - 62 = 89 - 62

9x = 27

Next, divide both sides by 9 to solve for x:

9x / 9 = 27 / 9

x = 3

Therefore, the solution to the equation 9x + 62 = 89 is x = 3.
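Model output is worth double-checking, even when the steps look clean. Here is a minimal sketch for verifying answers to equations of the form ax + b = c; `solve_linear` and `check_solution` are hypothetical helper names introduced for illustration.

```python
from fractions import Fraction


def solve_linear(a: int, b: int, c: int) -> Fraction:
    """Solve a*x + b = c for x using exact rational arithmetic."""
    if a == 0:
        raise ValueError("a must be non-zero")
    return Fraction(c - b, a)


def check_solution(a: int, b: int, c: int, x) -> bool:
    """Substitute x back into a*x + b and compare with c."""
    return a * x + b == c


x = solve_linear(9, 62, 89)
print(x)                             # 3
print(check_solution(9, 62, 89, x))  # True
```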

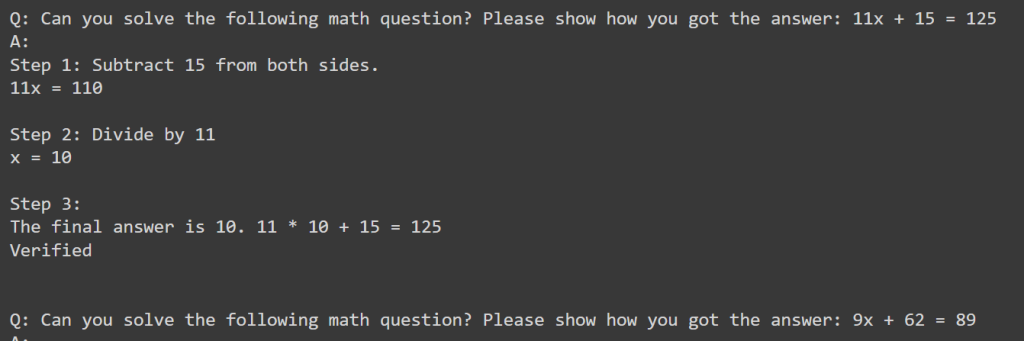

One-Shot Chain-of-Thought prompting

For one-shot prompting, we add in one example. You can see that we have a Q and A.

In this example, we go over a slight variation of the question we want answered.

OpenAI API Example 1

We are going to take a look at two different examples with the OpenAI API. The first is what I call the lazy way.

Just use your prompt with an additional example and send it to the large language model.

one_shot_prompt = """Q: Can you solve the following math question? Please show how you got the answer: 11x + 15 = 125
A:
Step 1: Subtract 15 from both sides.
11x = 110

Step 2: Divide by 11.
x = 10

Step 3: The final answer is 10.
11 * 10 + 15 = 125
Verified

Q: Can you solve the following math question? Please show how you got the answer: 9x + 62 = 89
A:"""

output = llm.invoke(one_shot_prompt)
print(output.content)

OpenAI API Example 2

This is the better approach to take. It correctly lays out all the steps to prompting with examples.

example = [
    {
        "Question": "Can you solve the following math question? Please show how you got the answer: 11x + 15 = 125",
        "Solution": """Step 1: Subtract 15 from both sides.
11x = 110

Step 2: Divide by 11.
x = 10

Step 3: The final answer is 10.
11 * 10 + 15 = 125
Verified""",
    }
]

After an example is set up, we want to create an example_prompt.

example_prompt = PromptTemplate(
    input_variables=["Question", "Solution"],
    template="Q: {Question}\nA: {Solution}",
)

Next, we can create a few-shot prompt template.

one_shot_prompt = FewShotPromptTemplate(
    examples=example,
    example_prompt=example_prompt,
    suffix="Q: Can you solve the following math question? Please show how you got the answer: {input}\nA:",
    input_variables=["input"],
)

If you want to see what your prompt looks like before it is sent to the model, use the following code.

formatted_prompt = one_shot_prompt.format(input="9x + 62 = 89")
print(formatted_prompt)

response = llm.invoke(formatted_prompt)
print(response.content)
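For intuition about what the template assembles, here is a plain-Python sketch of the same idea: format each example, then join the examples and the suffix with a blank line. This is not LangChain's implementation; `render_few_shot` is a hypothetical function, and the blank-line separator is an assumption about the default behavior.

```python
def render_few_shot(examples, example_template, suffix, separator="\n\n", **inputs):
    """Format each example, then join the examples and the suffix with a separator."""
    rendered = [example_template.format(**ex) for ex in examples]
    rendered.append(suffix.format(**inputs))
    return separator.join(rendered)


examples = [{"Question": "What is 2 + 2?", "Solution": "2 + 2 = 4"}]
prompt = render_few_shot(
    examples,
    example_template="Q: {Question}\nA: {Solution}",
    suffix="Q: {input}\nA:",
    input="What is 3 + 5?",
)
print(prompt)
```

The printed prompt ends with an open "A:" so the model continues from there, in the same format as the example.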

Few-Shot Chain-of-Thought prompting

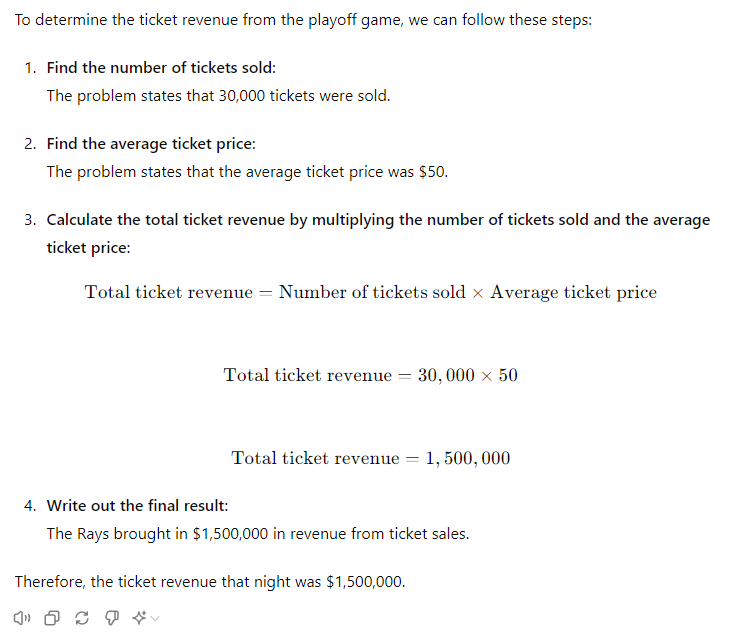

Few-shot prompting is when you give two or more examples. Let’s take a look at the ChatGPT band setlist and math problem one more time.

ChatGPT Example

In the example below, we add the case of the musician performing 25 songs at a concert.

OpenAI API Example (Better Example)

examples = [
    {
        "Question": "Can you solve the following math question? Please show how you got the answer: 11x + 15 = 125",
        "Solution": """Step 1: Subtract 15 from both sides.
11x = 110

Step 2: Divide by 11.
x = 10

Step 3: The final answer is 10.
11 * 10 + 15 = 125
Verified""",
    },
    {
        "Question": "Can you solve the following math question? Please show how you got the answer: 4x - 7 = 21",
        "Solution": """Step 1: Add 7 to both sides.
4x = 28

Step 2: Divide by 4.
x = 7

Step 3: The final answer is 7.
4 * 7 - 7 = 21
Verified""",
    },
]
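Because the model tends to imitate the examples it is given, an arithmetic slip in an example can carry over into the answer. The sketch below checks a claimed answer before you put it in a prompt. It assumes equations of the form ax + b = c with integer values; `check_example` is a hypothetical helper.

```python
import re

# Matches equations such as "11x + 15 = 125" or "4x - 7 =21".
EQUATION = re.compile(r"(-?\d+)\s*x\s*([+-])\s*(\d+)\s*=\s*(-?\d+)")


def check_example(equation: str, claimed_x: int) -> bool:
    """Return True if claimed_x satisfies an equation of the form 'ax + b = c'."""
    match = EQUATION.search(equation)
    if match is None:
        raise ValueError(f"unsupported equation: {equation!r}")
    a, sign, b, c = match.groups()
    b_value = int(b) if sign == "+" else -int(b)
    return int(a) * claimed_x + b_value == int(c)


print(check_example("11x + 15 = 125", 10))  # True
print(check_example("4x - 7 =21", 7))       # True
print(check_example("4x - 7 =21", 4))       # False
```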

example_prompt = PromptTemplate(
    input_variables=["Question", "Solution"],
    template="Q: {Question}\nA: {Solution}",
)

few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Q: Can you solve the following math question? Please show how you got the answer: {input}\nA:",
    input_variables=["input"],
)

# Fill in the new question before sending the prompt to the model
formatted_prompt = few_shot_prompt.format(input="9x + 62 = 89")
response = llm.invoke(formatted_prompt)
print(response.content)

Automatic chain of thought (Auto-CoT)

With Auto-CoT, you repeatedly ask guiding questions to work toward an answer. This is also done through an example, so think of it as an extension of a one-shot or few-shot prompt.
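One way to picture this: the worked example is a list of guiding questions, each followed by its answer. The sketch below renders such a list into the text of an example. `render_guided_solution` is a hypothetical helper, and the layout (a question, its answer, then a blank line) is just one reasonable choice.

```python
def render_guided_solution(steps):
    """Turn (guiding question, answer) pairs into one worked-example string."""
    lines = []
    for question, answer in steps:
        lines.append(question)
        lines.append(answer)
        lines.append("")  # blank line between steps
    return "\n".join(lines).rstrip()


steps = [
    ("How can you isolate x?", "Subtract 15 from each side"),
    ("Can you perform the operation?", "11x = 110"),
    ("How can you get x to be by itself?", "Divide by 11"),
    ("Can you perform the operation?", "x = 10"),
]
print(render_guided_solution(steps))
```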

ChatGPT Example

OpenAI API Example

cot_prompt = """Q: Can you solve the following math question? Please show how you got the answer: 11x + 15 = 125

Can you identify the equation used?
11x + 15 = 125

How can you isolate x?
Subtract 15 from each side

Can you perform the operation?
11x = 110

How can you get x to be by itself?
Divide by 11

Can you perform the operation?
x = 10

Is this the final answer? Check again to make sure.
11(10) + 15 = 125

Confirmed

Q: Can you solve the following math question? Please show how you got the answer: 9x + 62 = 89

"""

output = llm.invoke(cot_prompt)

OpenAI API Example

examples = [
    {
        "Question": "Can you solve the following math question? Please show how you got the answer: 11x + 15 = 125",
        "Solution": """Identify the equation used:
11x + 15 = 125

How can you isolate x?
Subtract 15 from each side

Perform the operation
11x = 110

How can you get x to be by itself?
Divide by 11

Perform the operation
x = 10

Is this the final answer? Check again to make sure.
11(10) + 15 = 125

Confirmed""",
    },
]

example_prompt = PromptTemplate(
    input_variables=["Question", "Solution"],
    template="Q: {Question}\nA: {Solution}",
)

few_shot_prompt = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    suffix="Q: Can you solve the following math question? Please show how you got the answer: {input}\nA:",
    input_variables=["input"],
)

formatted_prompt = few_shot_prompt.format(input="9x + 62 = 89")
response = llm.invoke(formatted_prompt)
print(response.content)

Multimodal Chain-of-Thought prompting

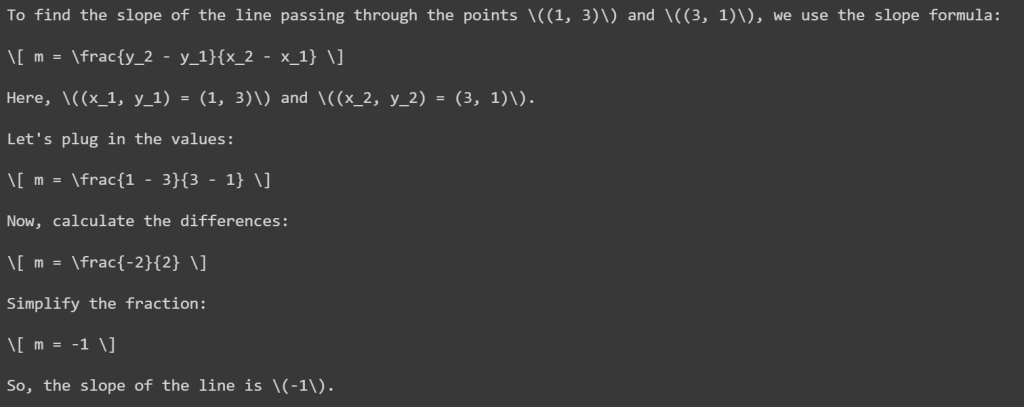

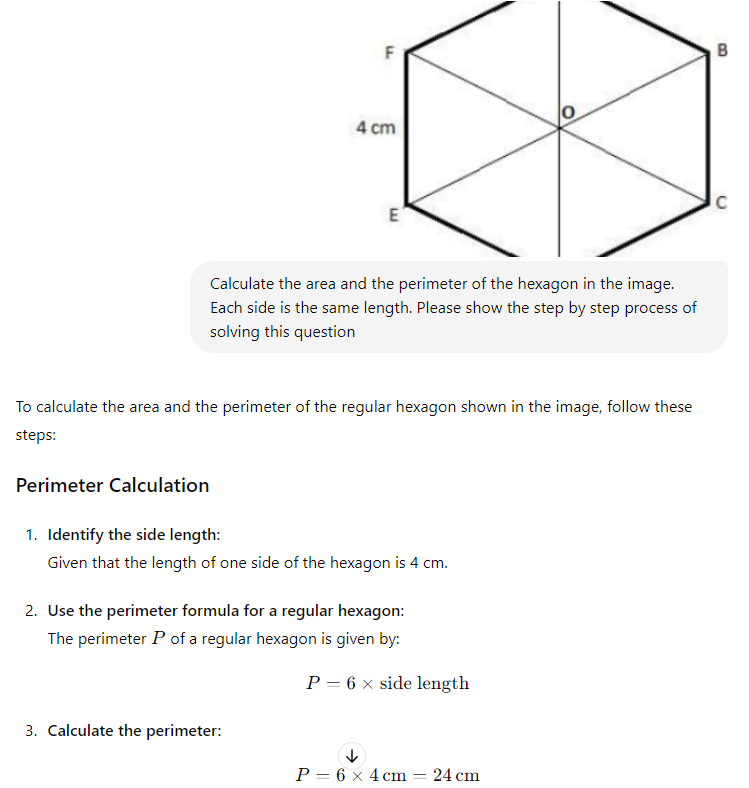

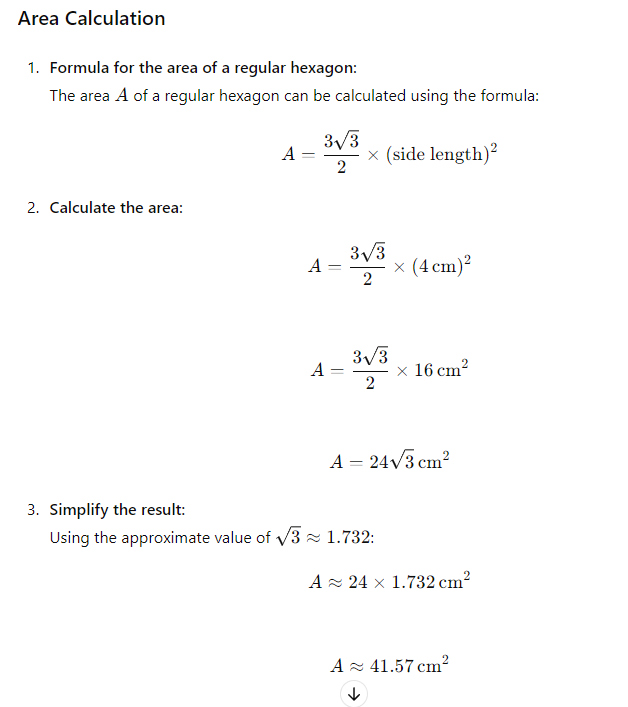

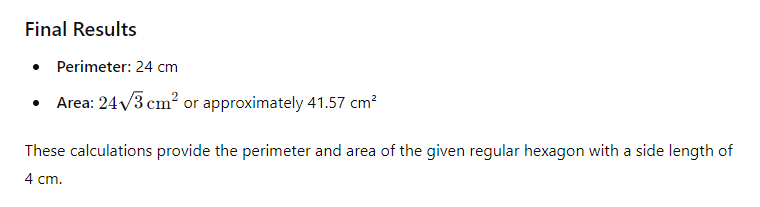

Multimodal prompting is when you use both images and text. We can essentially use an image with a zero-shot prompt to achieve a solution.

ChatGPT Example


OpenAI API Example

# Use the OpenAI SDK client here, not the LangChain OpenAI wrapper imported earlier
from openai import OpenAI

MODEL = "gpt-4o"
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))

from IPython.display import Image, display, Audio, Markdown
import base64

IMAGE_PATH = "/content/slope_example.png"

# Preview image for context
display(Image(IMAGE_PATH))

# Read the image file and return its contents as a base64 string
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")

base64_image = encode_image(IMAGE_PATH)

response = client.chat.completions.create(
    model=MODEL,
    messages=[
        {"role": "system", "content": "You are a math assistant who can solve algebra problems. You show every step taken."},
        {"role": "user", "content": [
            {"type": "text", "text": "I'm going to provide you an image of a graph. Please break down the process of finding the slope and confirm the answer."},
            {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{base64_image}"}},
        ]},
    ],
    temperature=0.0,
)

print(response.choices[0].message.content)
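The request above hard-codes image/png in the data URL. If your images may come in other formats, one option is to guess the MIME type from the file extension. This is a sketch using only the Python standard library; `image_to_data_url` is a hypothetical helper, not part of the OpenAI library.

```python
import base64
import mimetypes


def image_to_data_url(image_path: str) -> str:
    """Read an image file and return it as a base64 data URL."""
    # Guess the MIME type from the file extension (for example .png or .jpg)
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"not a recognized image type: {image_path}")
    with open(image_path, "rb") as image_file:
        encoded = base64.b64encode(image_file.read()).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"
```

The returned string can be passed as the "url" value of the image_url entry in the message.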